Abstract

This project investigates the correlation between self-reported emotional states, observed behavior, and physiological signals in a personalized environment control room. Participants complete PANAS (Positive and Negative Affect Schedule) questionnaires before and after experiencing various room conditions. While they are in the room, their activities are recorded on video and their EEG signals are captured. Facial emotion detection and human pose estimation are used to analyze their behavior. The aim is to identify room configurations that induce relaxation and reduce stress, based on each individual's emotional responses. Preliminary results suggest that tailored interventions could enhance stress reduction in controlled environments.

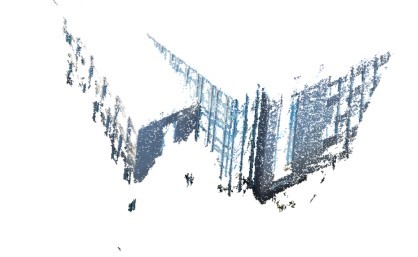

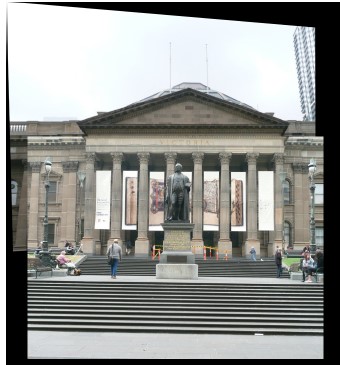

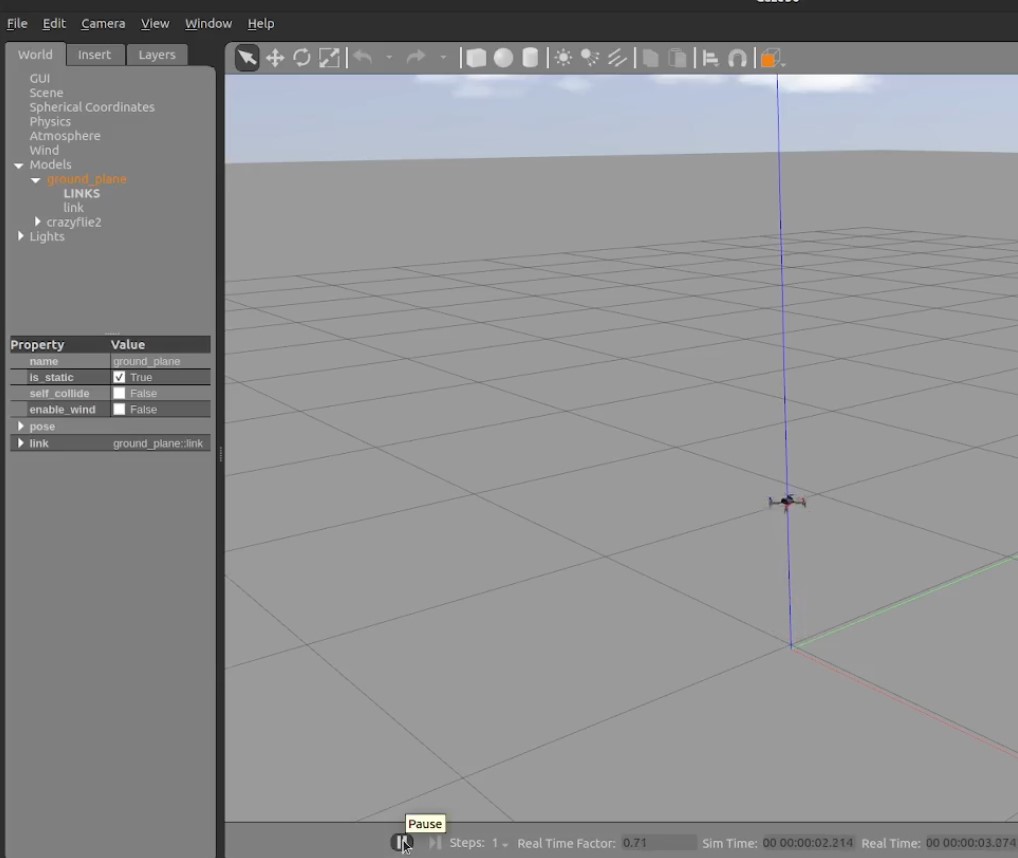

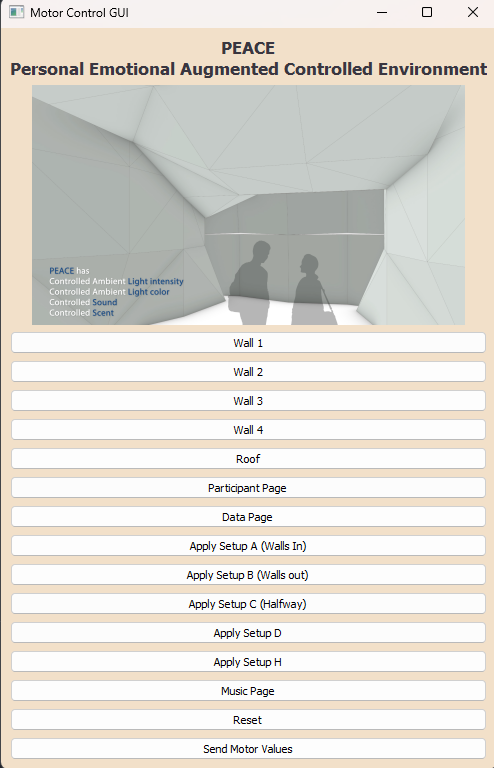

Personalized Environmental Control Room

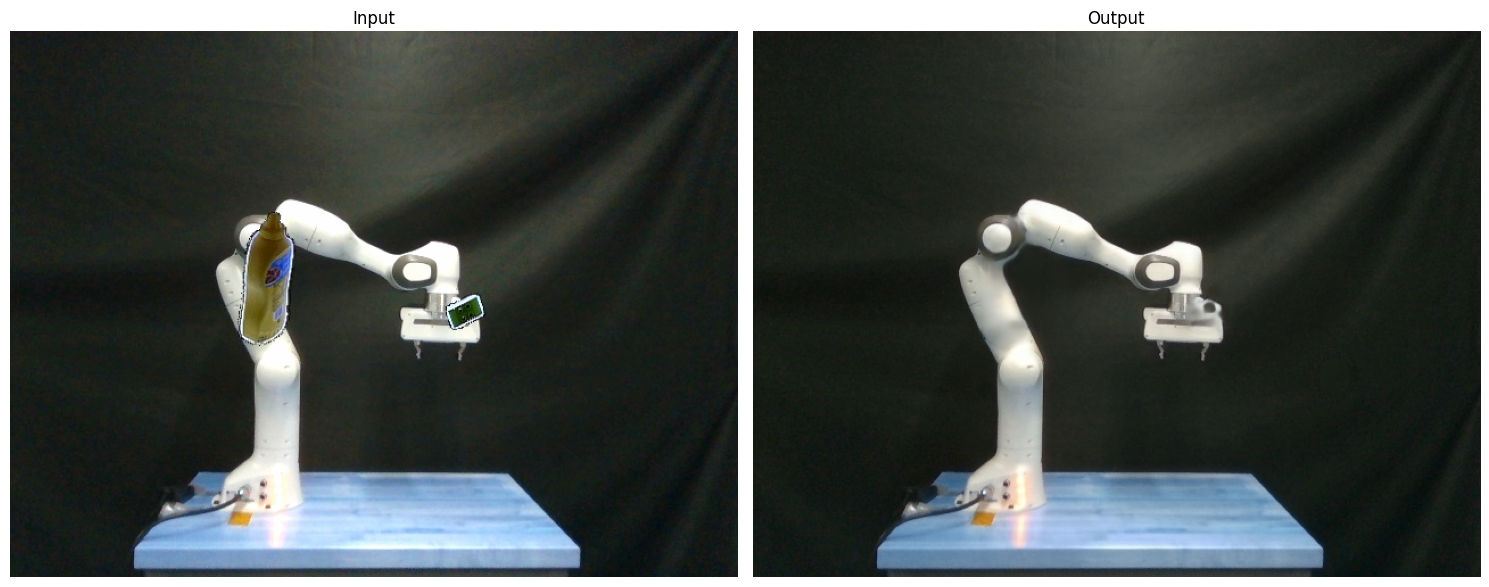

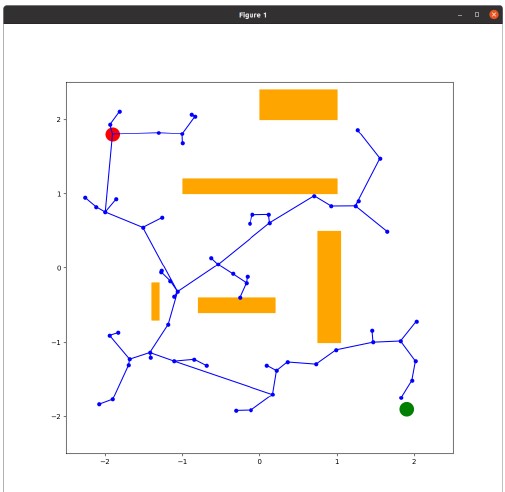

Previously recorded Human Pose Estimation

GUI for integration of all sensors

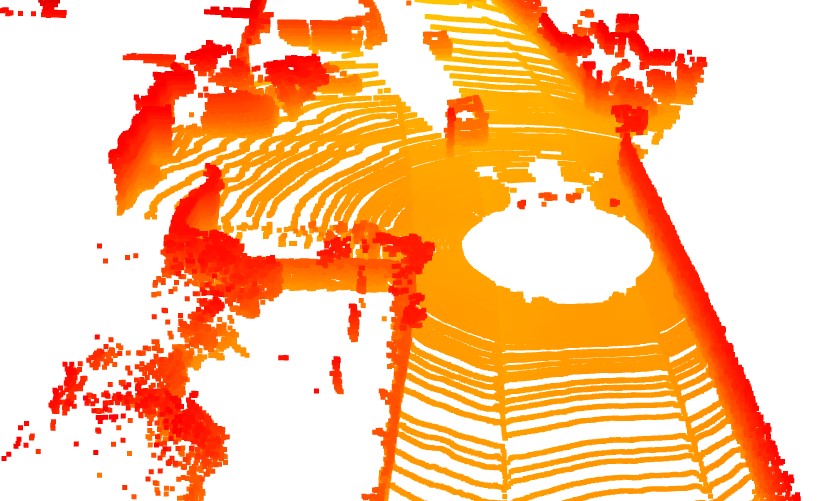

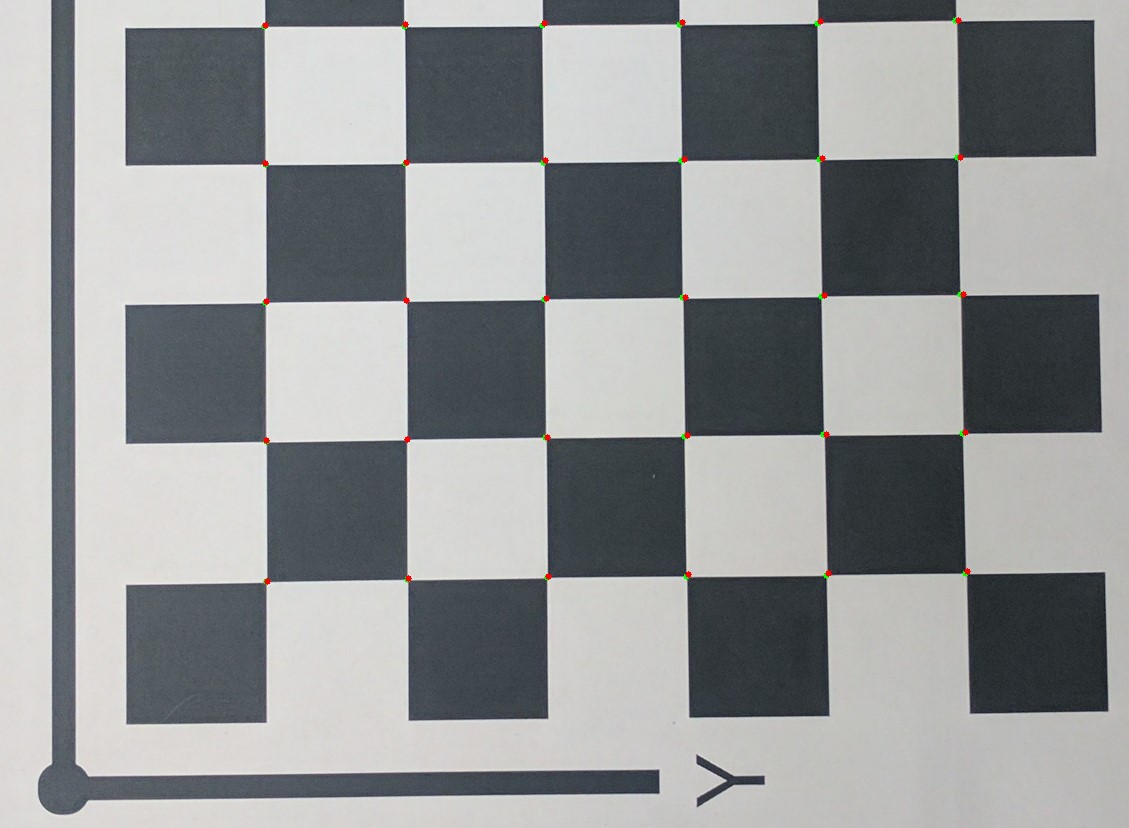

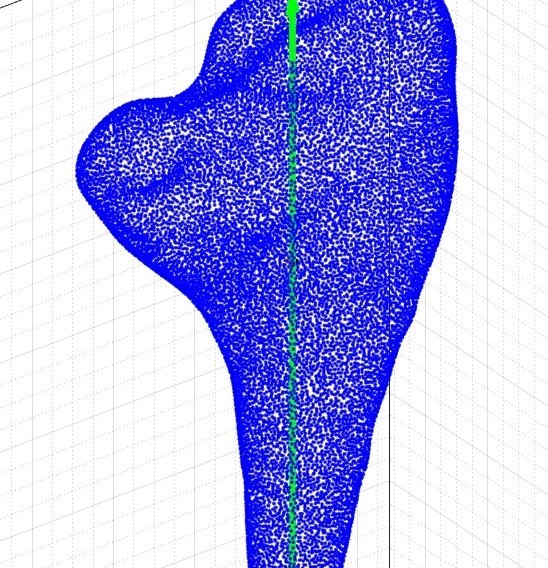

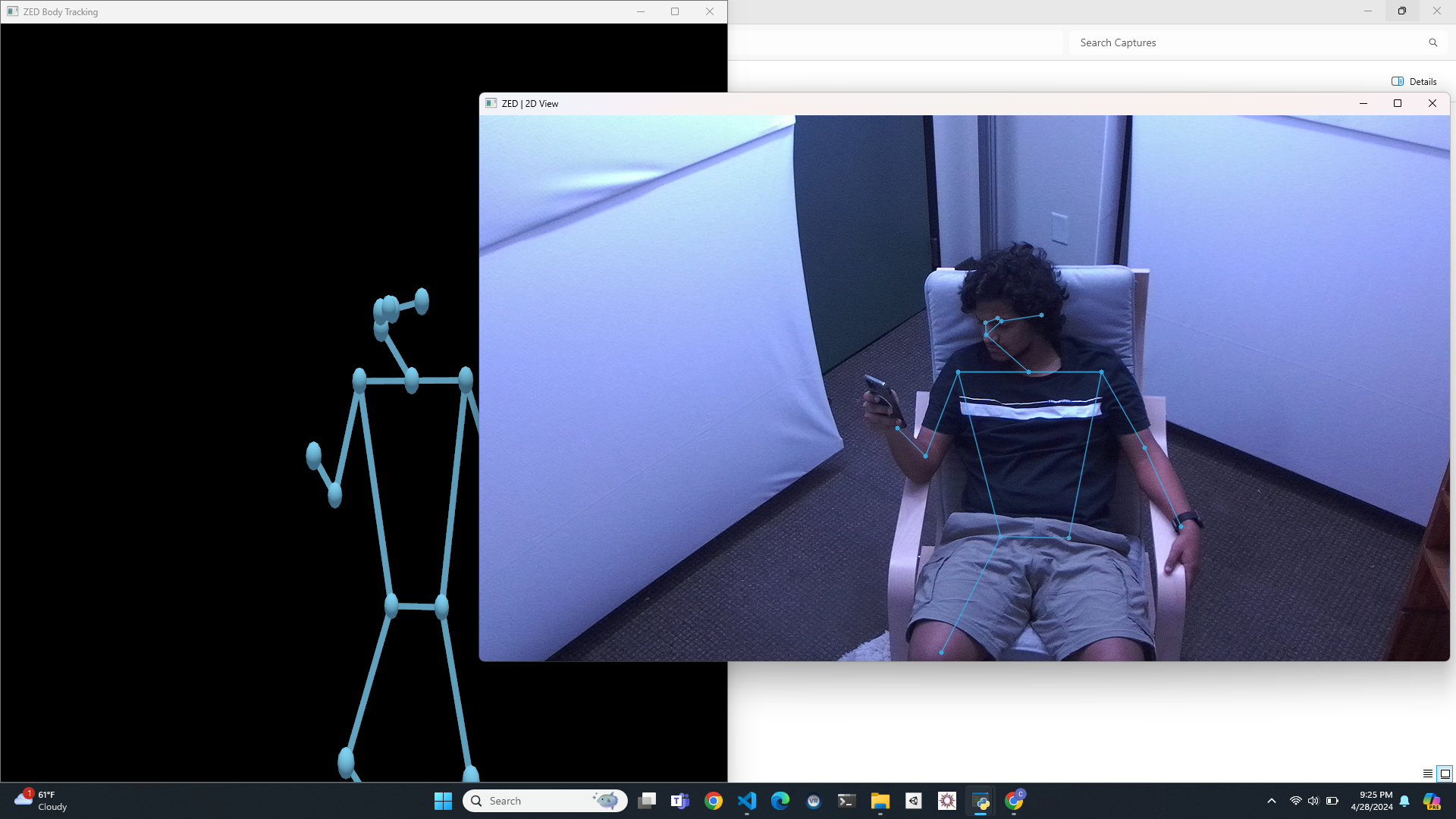

Human Pose Estimation using Zed 2i Camera

Introduction

This project explores the correlation between changes in environmental factors and human emotions by analyzing data from a personalized environment control room. Participants' emotional states are assessed before and after their time in the room using a questionnaire (the Positive and Negative Affect Schedule, PANAS) and audio recordings. Sensors, including EEG and video cameras, monitor participants' responses, while interactive activities in the room aim to reduce stress. Using facial emotion detection and human pose estimation, the project aims to map these behavioral and physiological responses to the emotional changes indicated by PANAS scores. The ultimate objective is to identify room conditions that induce relaxation and reduce stress for each individual, based on their initial emotional state.

Understanding the impact of environmental stimuli on human emotions is crucial for designing spaces conducive to well-being and productivity. This project investigates the complex relationship between environmental factors and emotional responses through a controlled experiment conducted in a personalized environment control room. By integrating multi-modal data collection techniques and advanced analytics, the study aims to uncover patterns linking environmental adjustments to changes in emotional states.

Problem Statement

Despite advances in sensor technology, accurately quantifying human emotions in real time remains challenging. Traditional supervised machine learning approaches are hindered by the lack of labeled data for emotion recognition. This calls for methods that can capture emotional dynamics without relying on predefined categories. In addition, noise and occlusions in sensor data, such as those from facial and pose detection systems, further complicate emotion inference. This project seeks to address these challenges by developing unsupervised learning algorithms that can discern emotional patterns in multi-modal sensor data. These patterns can then help identify environmental conditions conducive to relaxation.

Methodology

The project combines several data collection methods: PANAS questionnaires, audio recordings, video capture, EEG sensors, and environmental control interfaces. Facial emotion detection and human pose estimation algorithms analyze the video data. Unsupervised models, such as convolutional (CNN) and LSTM autoencoders, process the keypoint information extracted from the pose data. Gaussian Mixture Models (GMM) and Kalman filtering are used to suppress noise and improve the accuracy of keypoint detection. Data augmentation techniques that preserve the spatial constraints inherent in keypoint representations are applied to address data scarcity. Integration challenges, such as interfacing the Zed 2i camera with the graphical user interface (GUI), are also addressed to ensure seamless data acquisition.
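
The CNN and LSTM autoencoders are not specified further in this report, but sequence autoencoders of this kind typically consume fixed-length windows of normalized keypoints. The sketch below shows one way to prepare that input and to score windows by reconstruction error once a trained model exists. It assumes 2-D keypoints stored as a `(frames, joints, 2)` NumPy array. The function names, the root/neck joint indices, and the window and stride values are illustrative assumptions, not the project's actual settings.

```python
import numpy as np


def normalize_pose(keypoints, root=0, neck=1):
    """Center each frame on a root joint and scale by the root-to-neck distance.

    keypoints: array of shape (frames, joints, 2).
    root, neck: joint indices (hypothetical; they depend on the skeleton format).
    """
    centered = keypoints - keypoints[:, root:root + 1, :]
    scale = np.linalg.norm(centered[:, neck, :], axis=-1)   # shape (frames,)
    scale = np.where(scale > 1e-6, scale, 1.0)             # avoid division by zero
    return centered / scale[:, None, None]


def make_windows(keypoints, window=30, stride=15):
    """Slice a (frames, joints, dims) sequence into overlapping windows.

    Returns an array of shape (n_windows, window, joints * dims), a common
    input layout for CNN or LSTM sequence autoencoders.
    """
    frames, joints, dims = keypoints.shape
    flat = keypoints.reshape(frames, joints * dims)
    starts = range(0, frames - window + 1, stride)
    if len(starts) == 0:                                   # sequence shorter than one window
        return np.empty((0, window, joints * dims))
    return np.stack([flat[s:s + window] for s in starts])


def reconstruction_error(windows, reconstructed):
    """Mean squared error per window between the input and the autoencoder output."""
    return np.mean((windows - reconstructed) ** 2, axis=(1, 2))


# Example with random data standing in for real pose output (18 joints, 100 frames)
pose = np.random.default_rng(0).random((100, 18, 2))
windows = make_windows(normalize_pose(pose))
print(windows.shape)  # (5, 30, 36)
```

Windows with unusually high reconstruction error, or the autoencoder's latent codes, could then feed the unsupervised analysis of emotional patterns.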

Data Collection Methods:

The project takes a comprehensive approach to data collection, using several instruments to capture different aspects of the participants' experience. The PANAS questionnaire provides self-reported emotional states, and audio recordings offer additional insight through vocal cues and tonal variations. Video capture records participants' behaviors and expressions, while EEG sensors record brain activity associated with emotional arousal. Environmental control interfaces allow researchers to manipulate factors such as lighting, music, and spatial layout, enabling precise control over the experimental conditions.
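
PANAS scoring itself is simple arithmetic. The sketch below assumes the standard 20-item version: 10 positive-affect and 10 negative-affect adjectives, each rated from 1 to 5. Each subscale is the sum of its ten ratings, so scores range from 10 to 50, and the pre/post change is a difference of sums. The helper names are hypothetical, and the source does not state which PANAS version or time-frame instructions were used.

```python
# Standard 20-item PANAS adjectives, split by subscale
POSITIVE_ITEMS = ("interested", "excited", "strong", "enthusiastic", "proud",
                  "alert", "inspired", "determined", "attentive", "active")
NEGATIVE_ITEMS = ("distressed", "upset", "guilty", "scared", "hostile",
                  "irritable", "ashamed", "nervous", "jittery", "afraid")


def panas_scores(responses):
    """Return (positive_affect, negative_affect) for one completed questionnaire.

    responses maps each item name to a rating from 1 ("very slightly or not
    at all") to 5 ("extremely"), so each subscale ranges from 10 to 50.
    """
    for item in POSITIVE_ITEMS + NEGATIVE_ITEMS:
        rating = responses[item]  # raises KeyError if an item was skipped
        if not 1 <= rating <= 5:
            raise ValueError(f"{item!r} has rating {rating}, expected 1-5")
    positive = sum(responses[item] for item in POSITIVE_ITEMS)
    negative = sum(responses[item] for item in NEGATIVE_ITEMS)
    return positive, negative


def panas_change(before, after):
    """Post-session minus pre-session score for each subscale."""
    pa_before, na_before = panas_scores(before)
    pa_after, na_after = panas_scores(after)
    return {"delta_pa": pa_after - pa_before, "delta_na": na_after - na_before}
```

Consider a participant whose negative-affect ratings drop from 4 to 2 on every item. That participant would get `delta_na == -20`, meaning lower negative affect after the session.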

Facial Emotion Detection and Human Pose Estimation:

Facial emotion detection and human pose estimation algorithms are central to analyzing the video data. They automatically identify facial expressions and body postures, providing quantitative measures of emotional responses and physical activity. By extracting keypoints from each video frame, researchers can track participants' movements and facial expressions over time. This supports analysis of their emotional dynamics while they interact with the environment.
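
One quantitative activity measure is the average joint speed between consecutive frames, computed from the pose keypoints. The sketch below assumes keypoints are stored as a `(frames, joints, dims)` NumPy array, with occluded or undetected joints stored as NaN, and a default frame rate of 30 fps. Both conventions, and the function name, are assumptions for illustration.

```python
import numpy as np


def movement_energy(keypoints, fps=30.0):
    """Mean joint speed for each pair of consecutive frames, ignoring occluded joints.

    keypoints: (frames, joints, dims) array; NaN marks undetected joints.
    Returns an array of length frames - 1 in coordinate units per second,
    with NaN where no joint is visible in both frames of a pair.
    """
    step = np.linalg.norm(np.diff(keypoints, axis=0), axis=-1)  # (frames-1, joints)
    speed = step * fps
    visible = ~np.isnan(speed)                      # joint seen in both frames
    counts = visible.sum(axis=1)
    totals = np.where(visible, speed, 0.0).sum(axis=1)
    with np.errstate(invalid="ignore", divide="ignore"):
        return np.where(counts > 0, totals / counts, np.nan)
```

The average covers only joints visible in both frames. This keeps an occlusion from being read as a sudden burst of motion or stillness.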

Noise Mitigation and Data Augmentation:

Gaussian Mixture Models (GMM) and Kalman filtering are applied to suppress noise and improve the accuracy of keypoint detection. The GMM helps identify outliers and suppress noise in the keypoint data. The Kalman filter recursively estimates keypoint trajectories, improving tracking accuracy. Data augmentation expands the dataset while preserving the spatial constraints inherent in keypoint representations. These augmentation strategies are intended to support robust model training and generalization despite the limited data available.
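
The Kalman filtering step can be sketched for a single keypoint coordinate with a constant-velocity motion model. This minimal sketch makes three assumptions:

- Measurements arrive once per frame at 30 fps.
- Missing detections, or points already rejected as outliers (for example by the GMM stage, which is not shown), are marked as NaN.
- The noise variances are placeholder tuning values, not figures from the project.

The function name is hypothetical.

```python
import numpy as np


def kalman_filter_track(z, dt=1 / 30, process_var=50.0, meas_var=4.0):
    """Filter one keypoint coordinate over time with a constant-velocity model.

    z: 1-D array of per-frame measurements (e.g. a joint's x position in
       pixels); NaN marks frames with no usable detection.
    dt: time between frames in seconds (30 fps assumed).
    process_var, meas_var: placeholder noise variances to be tuned.
    Returns filtered positions; frames before the first detection stay NaN.
    """
    z = np.asarray(z, dtype=float)
    F = np.array([[1.0, dt], [0.0, 1.0]])   # position += velocity * dt
    H = np.array([[1.0, 0.0]])              # only position is observed
    # Process noise of a white-noise-acceleration model
    Q = process_var * np.array([[dt**4 / 4, dt**3 / 2],
                                [dt**3 / 2, dt**2]])
    R = np.array([[meas_var]])

    out = np.full(z.shape, np.nan)
    valid = np.flatnonzero(~np.isnan(z))
    if valid.size == 0:
        return out
    start = valid[0]
    x = np.array([z[start], 0.0])           # initial state: first position, zero velocity
    P = np.diag([meas_var, 1e3])            # very uncertain initial velocity

    for t in range(start, len(z)):
        if t > start:
            x = F @ x                       # predict state
            P = F @ P @ F.T + Q             # predict covariance
        if not np.isnan(z[t]):
            y = z[t] - H @ x                # innovation, shape (1,)
            S = H @ P @ H.T + R             # innovation covariance, shape (1, 1)
            K = P @ H.T @ np.linalg.inv(S)  # Kalman gain, shape (2, 1)
            x = x + K @ y                   # correct state with the measurement
            P = (np.eye(2) - K @ H) @ P     # correct covariance
        out[t] = x[0]
    return out
```

To smooth full trajectories, run the filter independently on the x and y series of each joint (and z, if 3-D keypoints are used). During short gaps the filter carries the last velocity estimate forward, so occluded frames receive a predicted position rather than a hole.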

Integration Challenges:

Integration efforts are focused on overcoming technical hurdles, such as interfacing the Zed 2i camera with the graphical user interface (GUI). Seamless integration of hardware and software components ensures smooth data acquisition and real-time monitoring of participant interactions. By addressing integration challenges, researchers can streamline the experimental process and facilitate comprehensive data analysis, ultimately advancing our understanding of the relationship between environmental stimuli and human emotions.
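
A multi-sensor setup like this one must put all of its streams on a common time base. The sketch below assumes that every stream (video frames, EEG samples) carries timestamps in seconds from a shared clock; the source does not describe how synchronization is actually done. For each video frame, it picks the nearest sensor sample using `numpy.searchsorted`. The function name and the 50 ms `max_gap` tolerance are illustrative assumptions.

```python
import numpy as np


def align_to_frames(frame_ts, sensor_ts, sensor_values, max_gap=0.05):
    """For each video frame, take the sensor sample nearest in time.

    frame_ts: (n_frames,) frame timestamps in seconds.
    sensor_ts: (n_samples,) sorted sensor timestamps on the same clock.
    sensor_values: (n_samples, ...) samples, e.g. one row of EEG channels each.
    Frames with no sample within max_gap seconds receive NaN.
    """
    frame_ts = np.asarray(frame_ts, dtype=float)
    sensor_ts = np.asarray(sensor_ts, dtype=float)
    values = np.asarray(sensor_values, dtype=float)
    if sensor_ts.size == 0:
        raise ValueError("sensor stream is empty")

    idx = np.searchsorted(sensor_ts, frame_ts)          # first sample at or after each frame
    left = np.clip(idx - 1, 0, len(sensor_ts) - 1)      # candidate just before
    right = np.clip(idx, 0, len(sensor_ts) - 1)         # candidate at or after
    use_right = np.abs(sensor_ts[right] - frame_ts) < np.abs(frame_ts - sensor_ts[left])
    nearest = np.where(use_right, right, left)

    aligned = values[nearest]                           # fancy indexing returns a copy
    too_far = np.abs(sensor_ts[nearest] - frame_ts) > max_gap
    aligned[too_far] = np.nan                           # no sample close enough in time
    return aligned
```

Nearest-sample matching is the simplest choice. For a high-rate signal such as EEG, averaging or computing band power over the interval around each frame may be more appropriate.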

Results

The preliminary findings of the project provide initial evidence of correlations between environmental adjustments and changes in participants' emotions. The analysis covers multi-modal sensor data: PANAS questionnaires, audio recordings, video capture, EEG sensors, and environmental control interfaces. Through it, researchers have begun to uncover relationships between environmental stimuli and emotional responses.

One key takeaway from the initial analysis is the potential of the unsupervised learning algorithms developed in this project to capture nuanced emotional dynamics. These algorithms are trained to discern patterns across the diverse streams of sensor data, and they show promise in identifying subtle shifts in emotional state. Despite the noise and occlusions inherent in real-world data, they show robustness in detecting and interpreting emotional cues. This robustness contributes to the reliability of emotion inference.

To further enhance the effectiveness of emotion inference models, ongoing efforts focus on refining data processing techniques. Augmentation strategies are being explored to expand the dataset and improve model generalization. By synthetically generating additional data points, researchers aim to address data scarcity issues and enhance the robustness of trained models. Additionally, sensor fusion approaches are being investigated to integrate information from multiple modalities, such as facial expressions, body movements, and physiological signals, into a cohesive framework for emotion analysis.
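
One way to generate extra pose sequences without breaking the spatial constraints between keypoints is to apply a single similarity transform to every joint. That transform can combine rotation, uniform scaling, and optional horizontal mirroring. This is an assumption about what such augmentation could look like, not the project's confirmed method. The angle and scale ranges, the `flip_prob` parameter, and the left/right joint pairs are hypothetical and depend on the skeleton format.

```python
import numpy as np


def augment_pose(keypoints, rng, max_angle_deg=15.0, scale_range=(0.9, 1.1),
                 flip_pairs=None, flip_prob=0.5):
    """Return a randomly rotated, scaled and optionally mirrored pose sequence.

    keypoints: (frames, joints, 2) array; NaN joints stay NaN.
    rng: a numpy.random.Generator.
    flip_pairs: optional (left, right) joint-index pairs (hypothetical; they
        depend on the skeleton format). When given, the sequence is mirrored
        horizontally with probability flip_prob and the pairs are swapped so
        that "left" labels still refer to the subject's left side.
    """
    center = np.nanmean(keypoints.reshape(-1, 2), axis=0)  # pivot point
    theta = np.deg2rad(rng.uniform(-max_angle_deg, max_angle_deg))
    scale = rng.uniform(*scale_range)
    # One similarity transform (rotation + uniform scale) shared by all joints
    A = scale * np.array([[np.cos(theta), -np.sin(theta)],
                          [np.sin(theta), np.cos(theta)]])
    out = (keypoints - center) @ A.T + center
    if flip_pairs is not None and rng.random() < flip_prob:
        out[..., 0] = 2 * center[0] - out[..., 0]  # mirror about the vertical line through center
        left, right = np.asarray(flip_pairs).T
        swapped = np.concatenate([right, left])
        out[:, np.concatenate([left, right])] = out[:, swapped]
    return out
```

Because every joint shares the same transform, all bone lengths scale by the same factor and their ratios are unchanged. Independent per-joint jitter, by contrast, would distort the skeleton.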

Integration efforts are also underway to streamline data collection and analysis processes. Challenges related to interfacing hardware components, such as the Zed 2i camera, with the graphical user interface (GUI) are being addressed to ensure seamless data acquisition. By optimizing data collection workflows and enhancing system interoperability, researchers aim to facilitate comprehensive insights into the complex interplay between environmental factors and human emotions.

Overall, the preliminary findings underscore the potential of leveraging multi-modal sensor data and unsupervised learning techniques to gain deeper insights into the relationship between environmental stimuli and human emotions. As the project progresses, continued refinement of data processing methods and integration efforts will contribute to the development of robust emotion inference models, ultimately informing the design of personalized environments conducive to emotional well-being and stress reduction.