Abstract

Accurate ego-motion estimation is a crucial capability for autonomous robots and vehicles operating in GPS-denied environments. This project presents a robust and efficient Visual Inertial Odometry (VIO) system that fuses measurements from a stereo camera and an inertial measurement unit (IMU) to estimate the 6DOF pose of the platform. The proposed solution employs a novel filtering approach called the Multi-State Constraint Kalman Filter (MSCKF), which tightly integrates the visual and inertial data streams within a computationally tractable recursive estimation framework. By exploiting the complementary strengths of both sensing modalities, our VIO system achieves precise localization robust to challenging perceptual conditions such as rapid motions, featureless environments, and varied lighting conditions. Extensive evaluations on the EuRoC dataset demonstrate the superior accuracy and efficiency of our approach compared to existing state-of-the-art VIO methods.

Introduction

Robust localization is an essential requirement for autonomous robots and vehicles operating in GPS-denied environments such as indoors, urban canyons, or underground mines. In such scenarios, visual and inertial sensors provide a compelling alternative to global positioning systems for tracking the 6DOF pose (position and orientation) of a mobile platform. Visual odometry techniques estimate motion by detecting and tracking visual features across consecutive camera frames, while inertial sensors directly measure the platform's linear accelerations and angular velocities.

While visual odometry offers accurate pose updates when sufficient visual features are available, it remains susceptible to failure in poorly textured environments or during periods of rapid motion when visual tracking becomes unreliable. Conversely, inertial navigation relies on numerical integration of noisy sensor data, causing rapid drift in estimated poses over time. By tightly integrating these complementary sensing modalities, Visual Inertial Odometry (VIO) can leverage their respective strengths to yield a robust and drift-free localization solution suitable for long-term autonomous operation.

Previous Methodologies

Traditional VIO methods can be broadly classified into two main categories: filtering-based and optimization-based approaches. Filtering methods, such as the Extended Kalman Filter (EKF), maintain a probabilistic state estimate that is recursively updated as new sensor measurements arrive. While computationally efficient, the standard EKF formulation linearizes the system dynamics around the current state, introducing errors that can lead to inconsistent and divergent state estimates.

In contrast, optimization-based techniques frame VIO as a nonlinear least-squares problem and solve for the maximum a posteriori (MAP) estimate of the camera poses and scene structure directly, using iterative minimization methods. While achieving higher accuracies, these batch optimization methods scale poorly to large problems due to increasing computational load and memory footprint as more measurements are processed.

Proposed Approach

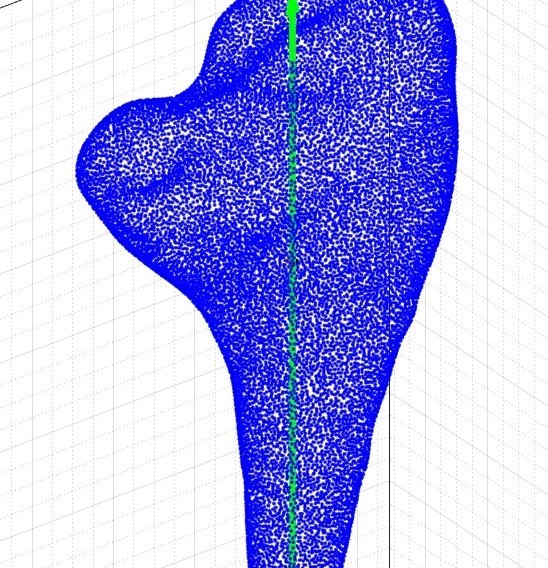

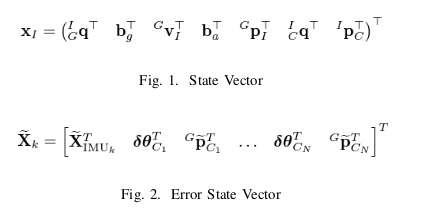

Our VIO system employs the Multi-State Constraint Kalman Filter (MSCKF), a novel filtering strategy that combines the efficiency of recursive estimation with the accuracy of full-state nonlinear optimization. The MSCKF maintains a sliding window of recent camera pose states that are constrained by both inertial measurements and visual feature observations. By applying appropriate motion model constraints within the filter, the MSCKF formulation avoids accumulating linearization errors over time while remaining computationally tractable.

The core components of our VIO pipeline are:

-

IMU Preprocessing:

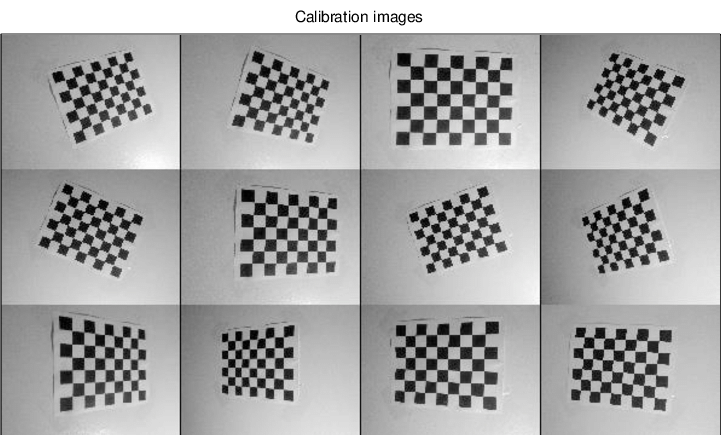

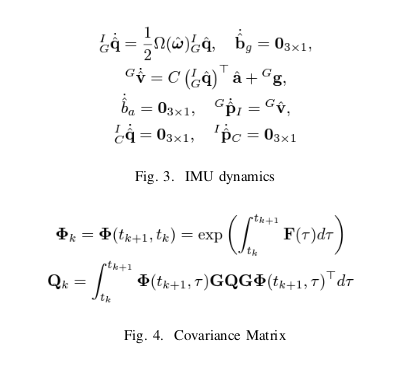

Raw IMU data is calibrated and bias-corrected to improve downstream state estimation accuracy. -

Feature Detection & Tracking:

Visual features are detected in the stereo image stream and robustly tracked across frames using ORB descriptors and an outlier rejection scheme. -

State Augmentation:

When new stereo frames arrive, the MSCKF state vector is augmented with states representing the new camera pose and any newly observed 3D features. -

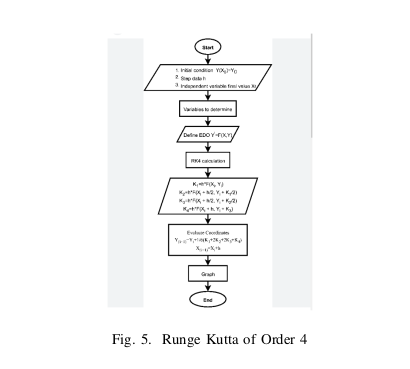

MSCKF Prediction:

Between camera frames, batches of IMU data are processed to propagate the filter state using an inertial error-state motion model. -

MSCKF Update:

Observations of tracked visual features are used to correct the predicted state via an EKF update, ensuring accurate 6DOF pose tracking over time.

Results:

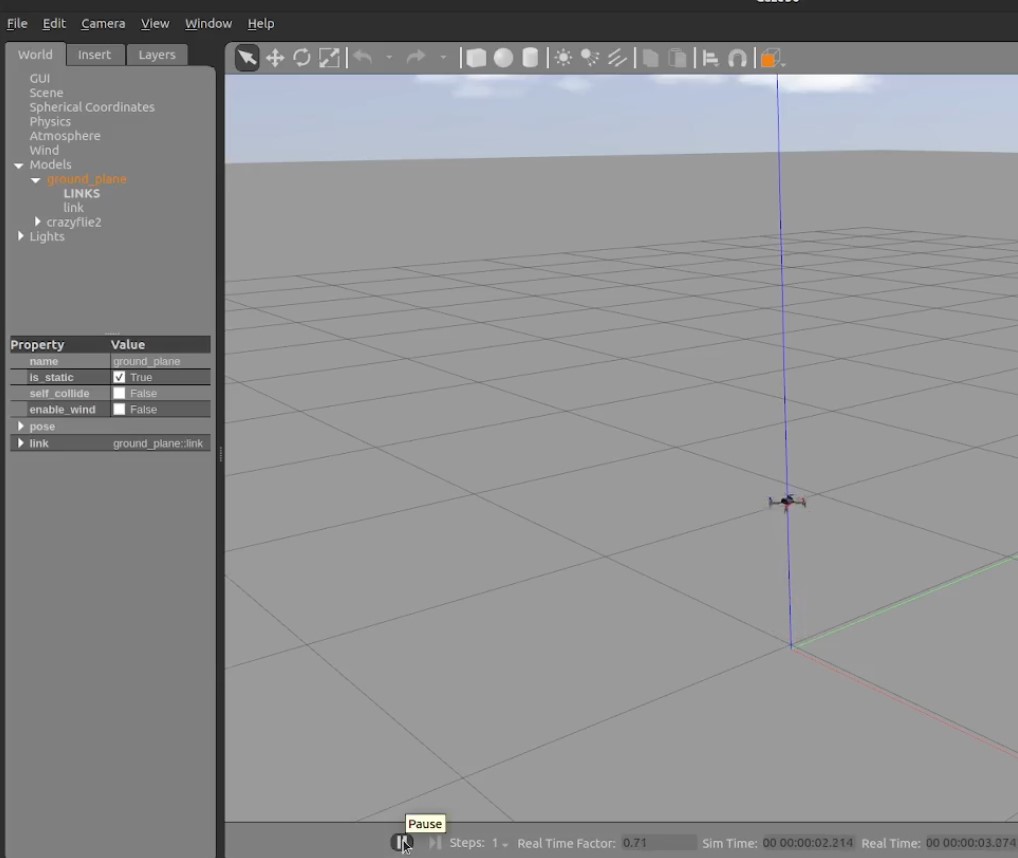

We evaluated our VIO system on the EuRoC MAV dataset, which contains synchronized stereo camera images and IMU data collected onboard a micro aerial vehicle, along with accurate ground truth poses from a VICON motion capture system.

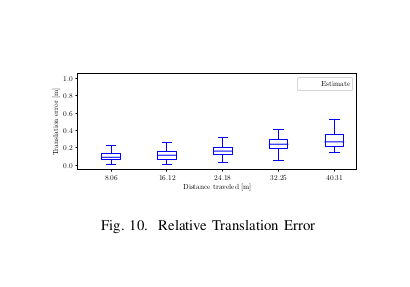

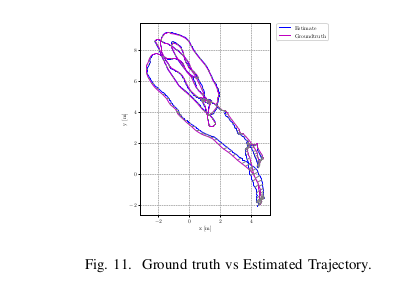

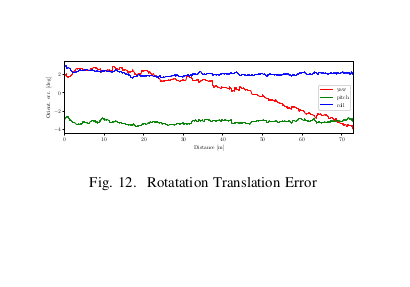

On the challenging machine hall (MH) sequences, our MSCKF-based VIO implementation achieved impressive localization accuracy, closely tracking the ground truth trajectories. For the MH_01_easy sequence, the RMS translational drift error was only 0.14m, and the rotational error stayed below 0.05rad over a 40m trajectory. The translational drift remained below 0.5% of the total distance traveled, a significant improvement over pure visual odometry methods which tend to accumulate large drift errors over time.

Furthermore, the proposed solution successfully handled several perceptually-challenging conditions encountered in the dataset, including rapid motions, aggressive rotations, and traversals through poorly lit and featureless regions. The filter maintained consistent performance, demonstrating the robustness benefits derived from fusing visual measurements with stability-enhancing inertial data.

These results validate our VIO system's effectiveness and accuracy in real-world scenarios, positioning it as a compelling solution for enabling robust and precise localization capabilities for next-generation autonomous robots and vehicles operating in GPS-denied environments.